Big Data is evolving as a huge opportunity for institutions. Today, organizations have comprehended that they are attaining hordes of profits by Big Data Analytics. They are probing huge data sets to reveal all concealed patterns, strange correlations, market inclinations, customer preferences and additional advantageous business details.

These systematic and logical conclusions are supporting institutions in more successful and productive marketing, novel revenue prospects, improved customer service. They are augmenting functional productivity, competitive dominance over competing establishments and other professional paybacks.

So, let us go to the next stage and recognize the complications allied with outdated method in dealing with big data prospects.

Complications Associated with Traditional Approach

In the outdated strategy, the core problem was managing the conglomeration of data i.e., structured, semi-structured and unstructured. The Rational database management system (RDBMS) emphasizes typically on structured data such as banking transactions, operational data etc. Hadoop dedicate itself to semi-structured, unstructured data such as text, videos, audios, Facebook posts, logs, etc. RDBMS technology is an established, extremely reliable, matured systems reinforced by a number of corporations. Whereas, in contrast, Hadoop is marketable by reason of Big Data, which generally be made up of unstructured data in several set-ups.

Here and now, let us comprehend on what are the foremost glitches linked with Big Data. To facilitate, moving ahead we can comprehend in what manner Hadoop developed as a solution.

The first problem is storing the colossal amount of data.

Packing big data in an outdated arrangement is impracticable. The reason is apparent, the packing will be restricted solitarily to single system and the data is growing at an incredible proportion.

Second problem is storing heterogeneous data.

Today, we know storage of data is a big concern, nevertheless, it is just a portion of the huge issue. In the meantime, we are aware that the data is not only enormous, but it is additionally existing in numerous formats such as: Unstructured, Semi-structured and Structured. Therefore, you require to guarantee that, you have a structure to store all these variations of data, created by several sources.

Third problem is accessing and processing speed.

The capacity of the hard disk is mounting but the speed of disk transfer or the access speed is not increasing at analogous proportion. Let us have a look at an example to understand better: If you have only a single 100 Mbps I/O station and you are handing out a huge data of 1TB, it will consume approximately 2.91 hours. Whereas, if you have four gears with a single I/O channel, for the equivalent expanse of data, will take only 43 minutes approx. Therefore, retrieving and processing speed is the greater concern than storage of the Big Data.

Prior to heading on to comprehend on what is Hadoop? Let us first understand the complete history that is behind Hadoop, i.e., since the time it has launched till now.

History of Hadoop

The Hadoop was launched by Doug Cutting and Mike Cafarella in 2002. Its beginning was the Google File System paper, issued by Google.

History of Hadoop, is discussed below in a stepwise format, let’s quickly go through it:

In 2002, Apache Nutch was developed by Cutting and Mike Cafarella to function on a project. It is an open-source web crawler software project. Though while functioning on Apache Nutch, they were handling the enormous data. To cost involved in the of storage for these big data is huge, which eventually turns out to be the outcome of that project. This complication turns out to be one of the significant causes for the development of Hadoop.

In 2003, Google presented a file system acknowledged as GFS (Google file system). It is a proprietary distributed file system developed to provide efficient access to data.

In 2004, Google delivered a white paper on Map Reduce. This method makes things easier for the data processing on big clusters.

In 2005, Doug Cutting and Mike Cafarella offered an innovative file arrangement known as NDFS (Nutch Distributed File System). This file system similarly consists of map reduce.

In 2006, Doug Cutting vacate Google and combined Yahoo. On the source of the Nutch project, Dough Cutting presents an innovative scheme Hadoop with a file system that is acknowledged as HDFS (Hadoop Distributed File System). Hadoop primary version 0.1.0 released in this year. Doug Cutting has termed his project Hadoop on his son’s toy elephant.

In 2007, Yahoo runs two clusters’ of 1000 machines.

In 2008, Hadoop has turn out to be the fastest system to categorize 1 terabyte of data on a 900-node cluster within 209 seconds.

In 2013, Hadoop 2.2 version was released.

In 2017, Hadoop 3.0 version was released.

In 2018, Hadoop 3.1 version was released.

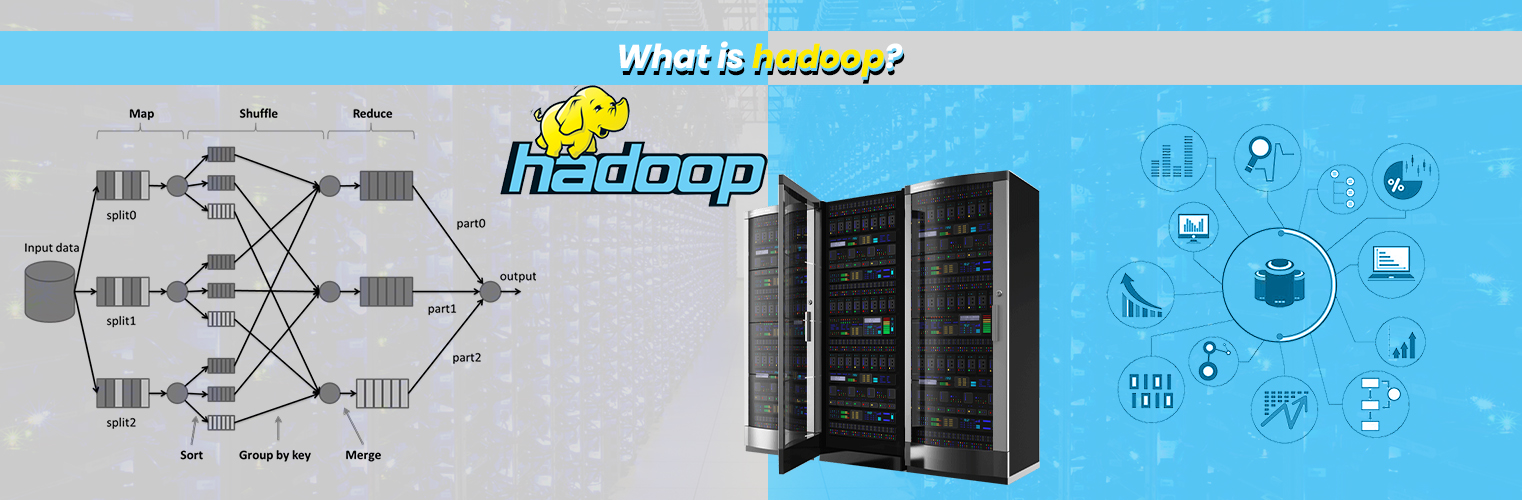

What is Hadoop?

Hadoop is an open-source outline from Apache and is used to store and analyse data process which are extremely gigantic in capacity. Hadoop is inscribed in Java and is not OLAP (online analytical processing). It is utilized for batch/offline methods. It is being employed by Facebook, Yahoo, Google, Twitter, LinkedIn and several more. Furthermore, it can be mounted up just by adding nodes in the cluster.

Modules of Hadoop

HDFS: Hadoop Distributed File System. Google issued its tabloid GFS and on the foundation of that HDFS was established. It states that the files will be fragmented into chunks and deposited in nodes on top of the scattered architecture.

Yarn: Thus far it’s an alternative Resource Negotiator that is employed for job forecast and administer the bunch.

Map Reduce: This is a skeleton that supports Java programs to do the corresponding calculation on data by means of key value pair. The Map task earns input data and translates it into a data set that can be figured in Key value pair. The output of Map task is expended by lesser task and then the out of reducer produce the anticipated outcome.

Hadoop Common: These Java libraries are employed to begin Hadoop and are used by supplementary Hadoop modules.

Hadoop Architecture

A package of the file process is known as the Hadoop architecture that consist of MapReduce engine and the HDFS (Hadoop Distributed File System). The MapReduce engine can be MapReduce/MR1 or YARN/MR2. On the other hand, a Hadoop process is made up of a solitary (master) leader and several strive (slave) nodes. The master node consists of Job Tracker, Task Tracker, NameNode, and DataNode however the slave node takes in DataNode and TaskTracker.

Hadoop Distributed File System

The Hadoop Distributed File System (HDFS) is a distributed file system for Hadoop. It comprises of a master/slave architecture. This architecture is made up of a solitary NameNode executes the function of master, and several DataNodes completes the part of a slave.

Play Videox

NameNode and DataNode both are sufficiently proficient to operate on commodity technologies. The Java language is employed to advance HDFS. Therefore, any engine that gives an edge to Java language and makes it operate easily the NameNode and DataNode software.

NameNode

It is an only master server exist in the HDFS cluster.

Being a single node, it might turn out to be the cause of single point failure.

It manages the file system namespace by implementing a process such as the opening, renaming and closing the files.

It makes things easier in architecture of the system.

DataNode

The HDFS cluster comprises of multiple DataNodes.

Respectively DataNode covers quite a lot of data blocks.

These data blocks are designed for storing data.

It is the accountability of DataNode to read and write requests from the file system’s customers.

It executes block creation, deletion, and replication upon guidelines from the NameNode.

Job Tracker

The function of Job Tracker is to agree to take the MapReduce jobs from customer and process the data by means of NameNode.

Cutting-edge response, NameNode offers metadata to Job Tracker.

Task Tracker

It operates as a slave node for Job Tracker.

It accepts task and code from Job Tracker and spread over that code on the file. This procedure can correspondingly be known as a Mapper.

MapReduce Layer

The MapReduce hails from into survival when the customer application succumbs the MapReduce profession to Job Tracker. In retort, the Job Tracker directs the demand to the suitable Task Trackers. Every now and then, the TaskTracker be unsuccessful or time out. In such circumstance, that portion of the career is rearranged.

Where is Hadoop used?

Hadoop is employed for:

Exploration – Yahoo, Amazon, Zvents

Log processing – Facebook, Yahoo

Data Warehouse – Facebook, AOL

Video and Image Analysis – New York Times, Eyealike

Up till now, we have perceived in what way Hadoop has made Big Data management possible. But then again there are certain circumstances where Hadoop operation is not suggested.

When not to use Hadoop?

Few scenarios here the Hadoop operation is not recommended are as follows:

Low Latency data access: Rapid access to minor fragments of data

Multiple data modification: Hadoop is a better suitable only if we are mainly anxious about data interpretation and not data modification.

Lots of small files: Hadoop is appropriate for scenarios, where there are lesser but bulkier files.

Also Read:- 10 Things You Should Know Before Buying Your First Crypto

Advantages of Hadoop

Fast: In HDFS the data scattered above the cluster and are charted which supports in more rapid retrieval. Even the gears to procedure the data are every so often on the equivalent servers, therefore dropping the processing time. It is equipped to progress terabytes of data in minutes and Peta bytes in hours.

Scalable: Hadoop cluster can be protracted by just accumulating nodes in the cluster.

Cost Effective: Hadoop is open-source and utilizes commodity hardware to protect data so it indeed lucrative in comparison to outdated interpersonal database management system.

Resilient to failure: HDFS has the features with which it can duplicate data above the network, so if single node is down or few additional network failures occur, then Hadoop takes the added replica of data and employ it. Generally, data are copied thrice but the duplication factor is configurable.